SHANGHAI, July 18, 2023 /PRNewswire/ -- SenseTime, a leading global artificial intelligence (AI) software company, has announced the introduction of its trustworthy AI toolbox, "SenseTrust", following the successful launch of its upgraded "SenseNova" foundation model sets at the World Artificial Intelligence Conference (WAIC) 2023. "SenseTrust" encompasses a comprehensive suite of trustworthy AI governance tools that cover data processing, model evaluation, and application governance. This toolbox provides ethics and security evaluation, and reinforcement solutions for SenseTime and the industry, promoting the development of a safe and trustworthy AI ecosystem.

Zhang Wang, Vice President and Chairman of the AI Ethics and Governance Committee of SenseTime, stated, "The widespread adoption of AI is fuelled by affordable and versatile technology, but ensuring systems have reliable security is a crucial component for large-scale implementation. While high computing power and general AI have accelerated the technology's deployment, they have also heightened the risk of technology misuse, making security and reliability urgent concerns. SenseTime addresses these challenges with its trustworthy AI toolbox, 'SenseTrust', which covers the entire AI development life cycle, from data processing to model development and application deployment. 'SenseTrust' aims to assist in building reliable large models by reducing the risk characteristics of AI in the new era."

During the WAIC, SenseTime also released the "SenseTime Large Model Ethics Principles and Practices Whitepaper", an annual report on AI ethics and governance co-written by SenseTime Intelligent Research Institute and the Shanghai Jiao Tong University Center for Computational Law and AI Ethics Research. The whitepaper focuses on the development of large models and the governance of generative AI. It presents the latest perspectives on AI governance, using the governance practices of the "SenseNova" foundation model sets as a case study.

"SenseTrust" creates a safety net for trustworthy AI

The AI production paradigm, featuring a combination of foundation models and fine-tuning processes, has significantly reduced development costs and application thresholds in the era of large models. However, with an increasing number of tasks, multi-modality, and a wider range of applications, the exponential growth of technology misuse and risk sources has made it increasingly difficult to define risk assessment metrics, potentially leading to a large-scale spread of risks. At the application level, risks such as AI hallucinations, data poisoning, adversarial attacks, and goal hijacking have emerged one after another, arousing global attention to the challenges of managing AI risks.

As a leading AI software company, SenseTime has always attached great importance to AI ethics and governance, emphasizing both technological innovation and governance development. As early as 2019, SenseTime launched two forward-looking initiatives, one on large model research and development and the other on AI ethics and governance. Prior to this, SenseTime had already established a comprehensive AI ethics and governance framework that includes an organizational structure, management system, review mechanism, tool system, ethics capacity building program, and stakeholder networks, which has been widely acknowledged by the industry.

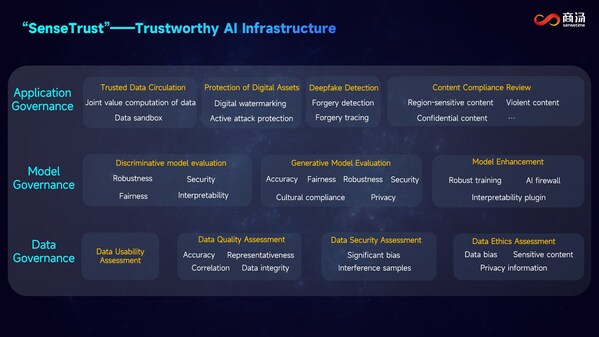

As technology continues to rapidly evolve and applications become more diverse, it is crucial to elevate AI governance to effectively respond to the fast-growing risk concerns. To address the risk dynamics of generative AI, SenseTime has integrated long-term ethical governance thinking and technical capabilities to create a trustworthy AI toolbox known as "SenseTrust". "SenseTrust" provides a comprehensive "toolbox" for evaluating and reinforcing trustworthy AI, including technical tools for data governance, model governance, and application governance.

At the data level, "SenseTrust" offers data governance tools such as anonymization, decontamination, compliance review, and bias evaluation. For example, the data decontamination tool can detect toxic data with backdoors or disturbances in the data source during model training and provide decontamination solutions, achieving effective decontamination with a detection rate of over 95%. The data anonymization tool has been used in various scenarios such as data annotation, urban management, and autonomous driving-related businesses.

At the model level, "SenseTrust" provides standardized and customized model evaluation capabilities for discriminative and generative models. The platform offers one-click evaluation for discriminative models such as live recognition, image classification, and target detection. For generative models, "SenseTrust" has created a large test dataset covering over 30 ethics and security evaluation dimensions, including adversarial security, robust security, backdoor security, interpretability, and fairness. To enhance the risk defence capabilities of models, "SenseTrust" provides an "AI firewall" that filters adversarial samples from the source, with a detection rate of up to 98%.

At the application level, SenseTime's expertise in data protection, digital forensics, and forgery detection has led to the development of integrated solutions for generation, authentication, and traceability. For example, "SenseTrust" has developed a digital watermarking solution to address traceability and authentication issues for AI-generated content (AIGC). The solution can embed specific information into digital carriers without affecting their value or being easily detectable. It supports multimodal data, and the embedded information can only be extracted through a specific decoder with an exclusive key. The digital watermarking technology of "SenseTrust" has been applied in various products, including "SenseMirage" and "SenseAvatar", and offered to customers in content creation and big data.

At this year's WAIC, SenseTime unveiled a significant upgrade to its "SenseNova" foundation model sets, integrating its "SenseTrust" tool system to evaluate and enhance risk defence capabilities for both generative and discriminative AI models.

Furthermore, SenseTime is sharing its large model and generative AI governance practices. For instance, its "SenseTrust" solution for comprehensive forgery detection has been integrated into the security systems of more than ten banks, achieving a success rate more than 20% higher than the industry average in intercepting various attacks and preventing network fraud such as identity theft and payment fraud. Additionally, SenseTime has made related technologies such as model inspection, digital watermarking, and proactive protection against poisoning attacks available through its "Open Platform on AI Security and Governance".

About SenseTime

SenseTime is a leading AI software company focused on creating a better AI-empowered future through innovation. We are committed to advancing the state of the art in AI research, developing scalable and affordable AI software platforms that benefit businesses, people and society as a whole, while attracting and nurturing top talents to shape the future together.

With our roots in the academic world, we invest in our original and cutting-edge research that allows us to offer and continuously improve industry-leading AI capabilities in universal multimodal and multi-task models, covering key fields across perception intelligence, natural language processing, decision intelligence, AI-enabled content generation, as well as key capabilities in AI chips, sensors and computing infrastructure. Our proprietary AI infrastructure, SenseCore, integrates computing power, algorithms, and platforms, enabling us to build the "SenseNova" foundation model sets and R&D system that unlocks the ability to perform general AI tasks at low cost and with high efficiency. Our technologies are trusted by customers and partners in many industry verticals including Smart Business, Smart City, Smart Life and Smart Auto.

SenseTime has been actively involved in the development of national and international industry standards on data security, privacy protection, ethical and sustainable AI, working closely with multiple domestic and multilateral institutions on ethical and sustainable AI development. SenseTime was the only AI company in Asia to have its Code of Ethics for AI Sustainable Development selected by the United Nations as one of the key publication references in the United Nations Resource Guide on AI Strategies published in June 2021.

SenseTime Group Inc. (stock code: 0020.HK) has successfully listed on the Main Board of the Stock Exchange of Hong Kong Limited (HKEX). We have offices in markets including Hong Kong, Mainland China, Taiwan, Macau, Japan, Singapore, Saudi Arabia, the United Arab Emirates, Malaysia and South Korea, as well as presences in Germany, Thailand, Indonesia and the Philippines. For more information, please visit SenseTime's website as well as its LinkedIn, Twitter, Facebook and YouTube pages.

Media Contact

SenseTime Group Limited

Email: pr@sensetime.com
