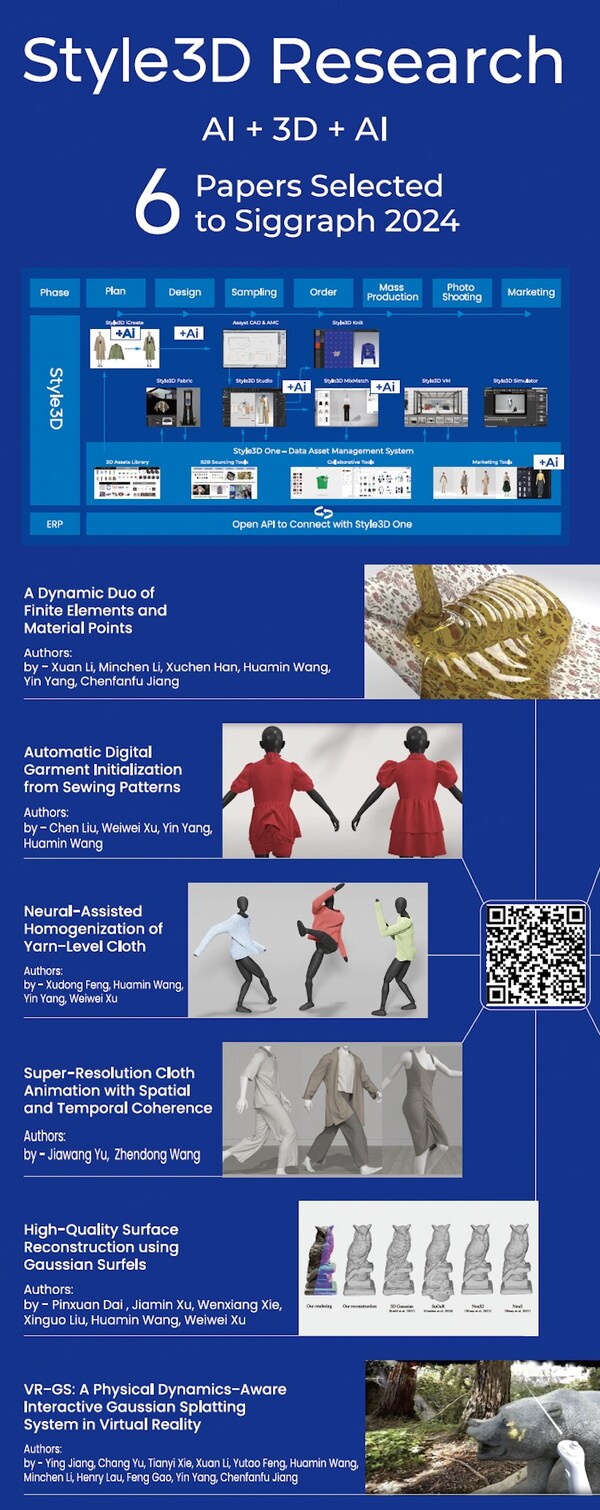

DENVER, July 23, 2024 /PRNewswire/ -- Style3D, a trailblazing force in the fashion technology industry, is thrilled to announce that six of its research papers have been accepted for presentation at the upcoming ACM SIGGRAPH 2024 conference. This remarkable achievement cements Style3D's position as a visionary leader in the field, showcasing its revolutionary research and innovative solutions that are catapulting the fashion industry into the future.

The papers, which masterfully intertwine graphics and AI technologies, cover a dazzling array of topics, from elevating multi-material high-fidelity effects and automating processes using AI to enhancing 3D effects and interactions in AR/MR environments.

These groundbreaking advancements span the entire fashion industry pipeline, from front-end design to back-end marketing and display. Excitingly, all of these cutting-edge research findings will be seamlessly integrated into Style3D's comprehensive suite of tools, ensuring that the industry can reap the benefits of these game-changing innovations.

Below are summaries of the six papers:

A Dynamic Duo of Finite Elements and Material Points

This paper introduces a novel approach integrating the Finite Element Method (FEM) and the Material Point Method (MPM) to enhance the simulation of multi-material systems. This research lays the foundation for future studies in multi-material simulation, with potential applications in simulating protective gear in extreme environments such as sandy or snowy terrains.

Automatic Digital Garment Initialization from Sewing Patterns

With the rapid development of digital fashion and generative AI technologies, there is a need for an automatic method to transform digital sewing patterns into well-fitting garments on mannequins. In this work, Style3D addresses this issue by employing AI classification, heuristic methods, and numerical optimization, ultimately developing an innovative automatic garment initialization system. This system has been partially integrated into Style3D's products and is expected to further alleviate the workload of technical designers in creating digital garments.

Neural-Assisted Homogenization of Yarn-Level Cloth

Real-world fabrics, composed of yarns and fibers, exhibit complex stress-strain relationships, posing significant challenges for homogenization using continuum-based models for rapid simulation. In this paper, Style3D introduces a neural homogenization constitutive model specifically designed for simulating yarn-level fabrics. Style3D is integrating this model into its product matrix, aiming to improve the accuracy of knitted fabric simulations in future applications.

Super-Resolution Cloth Animation with Spatial and Temporal Coherence

Creating super-resolution cloth animations that add fine wrinkle details to coarse cloth meshes requires maintaining spatial consistency and temporal coherence across frames. In this paper, Style3D presents an AI framework designed to address these issues, featuring a simulation correction module and a mesh-based super-resolution module. This research enhances 3D detail effects through AI on top of 3D simulations and is expected to prove valuable in Style3D products with real-time simulation capabilities, such as Style3D MixMatch.

High-Quality Surface Reconstruction using Gaussian Surfels

Style3D introduces a novel point-based representation, Gaussian surfels, which combines the flexible optimization capabilities of 3D Gaussian points with the surface alignment properties of surfels. Experimental results demonstrate that Style3D's method significantly improves surface reconstruction performance and shows potential for directly reconstructing digital garments from handheld devices.

VR-GS: A Physical Dynamics-Aware Interactive Gaussian Splatting System in Virtual Reality

In this work, Style3D introduces the VR-GS system, designed to provide a seamless and intuitive experience for human-centric 3D content interaction in virtual reality. This system is integrated with Style3D's internal real-time simulation engine, Style3D Simulator, and is expected to greatly enhance human interaction with digital garments in AR/MR environments.

Through its "AI+3D+AI" technology-powered value chain, Style3D is spearheading a revolution in the fashion design and development process, enhancing connectivity and collaboration efficiency across the entire industry chain. By turbocharging the "Concept-to-Consumer" transformation, Style3D is propelling the fashion industry into a new era of intelligence and efficiency, leaving onlookers in awe of its relentless pursuit of innovation.

Style3D will take center stage at the SIGGRAPH 2024 exhibition (Style3D booth number: 619) from July 30th to August 1st at the Colorado Convention Center. Visitors will be treated to an exhilarating showcase of Style3D's latest innovations, offering an exclusive opportunity to witness firsthand the future of fashion technology.

For media inquiries, please contact: marketing@style3d.com

About Style3D

Established in 2015, Style3D specializes in high-fidelity, realistic cloth simulation for the fashion and entertainment industries. Style3D offers end-to-end solutions aimed at enhancing modeling efficiency and ensuring superior animation quality. To date, over 1,000 companies worldwide have integrated Style3D products into their workflows. With presentations at SIGGRAPH and ongoing research, Style3D is committed to delivering cutting-edge results and AI-driven tools to optimize efficiency and visual effects.
